Follow along on the Colab notebook

This quickstart guide will walk you through the process of using Supervised Fine Tuning to build a technical blog post generator. Specifically we will:

- Build a Pi Scoring System to quantify the "goodness" of our technical blog posts

- Use a prompt tuned model to generate blog posts and apply our Scoring System to evaluate their quality

- Use our Scoring System to filter a set of training data and input that data into a Supervised Fine Tuning run to train a model to generate blog posts in our desired style

Imagine you're building an AI application that generates technical blog posts from bullet points. As a fan of the Machine Learning Mastery blog, you want to create content that has that same clear, practical style with the perfect balance of explanation and code examples. To get started, we'll build a Pi Scoring system capable of evaluating some of your favorite things about the blog and then we'll move on to prompting a model to generate blog posts for us

Reading through some of your favorite posts on the Machine Learning Mastery blog, you notice a couple characteristics that you want to capture in your blog post generator. Namely, you love how the blog structures content in a very easy to parse fashion that still allows you to go deep on certain topics, and that the blog always includes code examples with explanations and potential risks to mitigate. Let's capture this in a Pi Scoring system to guide and evaluate our modeling efforts (see the full code notebook here)

- Content Structure:

- Visual breaks (images, code snippets, bullet points)

- Use of second person (addressing readers as "you")

- Inclusion of links to additional resources

- Consistent section headings throughout

- Technical Communication:

- Inclusion of relevant code examples

- Clear explanations of code snippets

- Identification of potential pitfalls or common mistakes

# @title Initialize the Pi scoring system from a JSON description

from withpi.types import Contract

blog_writer_scoring_system_json = """

{

"description": "A streamlined rubric for evaluating technical blog post quality",

"name": "Technical Blog Post Quality Assessment",

"dimensions": [

{

"description": "Evaluates the content structure of the blog post",

"label": "Content Structure",

"sub_dimensions": [

{

"description": "Are there visual breaks (images, code snippets, bullet points) to break up the text?",

"label": "Visual breaks",

"scoring_type": "PI_SCORER",

},

{

"description": "Does the blog post address the reader in second person (you, your etc.)?",

"label": "Second person",

"scoring_type": "PI_SCORER",

"weight": 1

},

{

"description": "Does the post include links to additional resources or references?",

"label": "Additional resources",

"scoring_type": "PI_SCORER",

"weight": 1

},

{

"description": "Are there consistent section headings throughout the post?",

"label": "Section headings",

"scoring_type": "PI_SCORER",

"weight": 1

}

],

"weight": 1

},

{

"description": "Evaluates the technical communication of the blog post",

"label": "Technical Communication",

"sub_dimensions": [

{

"description": "Are code examples included where relevant?",

"label": "Code inclusion",

"scoring_type": "PI_SCORER",

"weight": 1

},

{

"description": "Does the post explain the code snippets when they are included?",

"label": "Code explanation",

"scoring_type": "PI_SCORER",

"weight": 1

},

{

"description": "Does the post call out potential pitfalls or common mistakes?",

"label": "Pitfalls",

"scoring_type": "PI_SCORER",

"weight": 1

}

],

"weight": 1

}

]

}

"""

blog_writer_scoring_system = Contract.model_validate_json(blog_writer_scoring_system_json)Once you have a scoring system, the next step to take is to try prompting a model to generate your blog posts and see how well that works. You might use a basic prompt like

System Prompt:

"Given a topic, generate a technical blog post"

Example User Input:

"Exploring AutoML with TPOT: Streamlining Machine Learning Pipeline Creation and Model Deployment for Non-Technical Users"The Pi Prompt Playground (view example) makes it very easy to quickly test this out in a UI, and the Pi SDK allows us to quickly run this prompted model on a larger evaluation set of technical blog topics (view the code here)

Once we try it in a few scenarios, we quickly spot some issues:

- Our generator tends to make large walls of text, and it doesn't directly engage the reader with questions or other 2nd person statements

- You're not seeing many links to external resources or consistent use of headers to break up paragraphs

- The blog content is rarely accompanied by code blocks

.png)

You can see these scores on a handful of examples in the Prompt Playground (view example), and you're also able to use the SDK to compare how the generated blog posts score against actual blog posts from Machine Learning Mastery (view the code here)

.png)

Supervised Fine Tuning (SFT) is a great tool in scenarios where you have examples that represent exactly the behaviour you want to curate in your model. In our example, we are sitting on a treasure trove of example blog posts (View the HuggingFace dataset) so we're ready to see if teaching our model with these examples will yield better results than prompting the model.

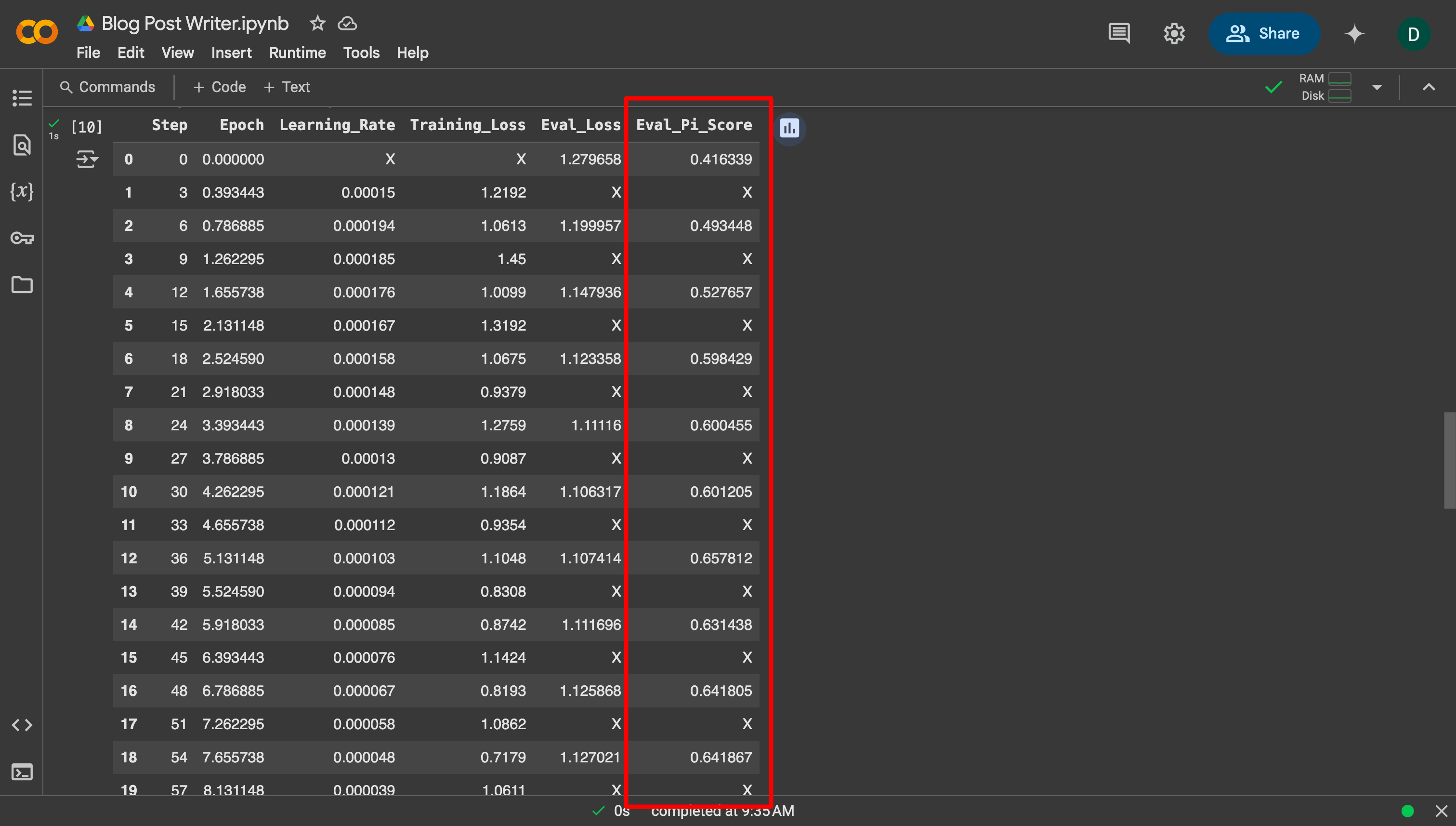

The Pi SDK allows you to run a full fine tuning job, complete with ongoing scoring with the Pi Scoring system, in just a few lines of code (see the full code here)

Standard SFT libraries just show you the Training and Validation losses. Essentially, how well is your model learning to emulate the examples, but that doesn't tell you whether the model is getting better for your application. The Pi SDK solves this problem. While your training is running, you can see live updates on how your model is performing against your scoring system.

.png)

The scores above show a marked quantitative improvement, but does our new model pass the eye test?

The linked code notebook has a section where you can manually scan the prompt generated vs fine tuned model generated posts and their scores side by side. We've brought in an example below to show just how much things have improved.

Example User Prompt: Implementing a Multi-Class Logistic Regression Classifier in PyTorch for MNIST Image Classification

Prompted model | Fine tuned model |

|---|---|

Total score 0.363 Content Structure 0.262 Visual breaks 0.047 Second person 0.0 Additional resources 0.0 Section headings 1.0 Technical Communication 0.464 Code inclusion 0.754 Code explanation 0.637 Pitfalls 0.0 | Total score 0.752 Content Structure 0.918 Visual breaks 0.871 Second person 0.949 Additional resources 0.852 Section headings 1.0 Technical Communication 0.586 Code inclusion 1.0 Code explanation 0.75 Pitfalls 0.009 |

Generated Response Implementing a Multi-Class Logistic Regression Classifier in PyTorch for MNIST Image Classification In this blog post, we will implement a multi-class logistic regression classifier in PyTorch for MNIST image classification. We will cover the basics of logistic regression, PyTorch implementation, and provide a step-by-step guide to train and evaluate the model. Logistic Regression Basics Logistic regression is a type of supervised learning algorithm used for binary classification problems. However, we can extend it to multi-class classification by using the one-vs-all approach, where we train a separate logistic regression model for each class. The output of each model is then used to compute the final class probabilities. PyTorch Implementation We will use PyTorch to implement the multi-class logistic regression classifier. We will define a custom PyTorch module for the logistic regression model and use the MNIST dataset for training and evaluation. Step 1: Install Required Libraries First, install the required libraries using pip: Import the required libraries and load the MNIST dataset: ```python import torch import torch.nn as nn import torchvision import torchvision.transforms as transforms Load MNIST datasettransform = transforms.Compose([transforms.ToTensor()]) trainset = torchvision.datasets.MNIST(root='./data', train=True, download=True, transform=transform) trainloader = torch.utils.data.DataLoader(trainset, batch_size=64, shuffle=True) testset = torchvision.datasets.MNIST(root='./data', train=False, download=True, transform=transform) testloader = torch.utils.data.DataLoader(testset, batch_size=64, shuffle=False) ``` Step 3: Define Logistic Regression Model Define a custom PyTorch module for the logistic regression model: ```python class LogisticRegression(nn.Module): def init(self, num_classes): super(LogisticRegression, self).init() self.fc = nn.Linear(784, num_classes) def forward(self, x): ``` Step 4: Train the Model Train the model using the MNIST dataset: ```python Initialize model, loss function, and optimizermodel = LogisticRegression(num_classes=10) criterion = nn.CrossEntropyLoss() optimizer = torch.optim.SGD(model.parameters(), lr=0.01) Train the modelfor epoch in range(10): for i, (images, labels) in enumerate(trainloader): images, labels = images.to(device), labels.to(device) optimizer.zero_grad() outputs = model(images) loss = criterion(outputs, labels) loss.backward() optimizer.step() print('Epoch {}: Loss = {:.4f}'.format(epoch+1, loss.item())) ``` Step 5: Evaluate the Model Evaluate the model using the test dataset: ```python Evaluate the modelmodel.eval() test_loss = 0 correct = 0 with torch.no_grad(): for images, labels in testloader: images, labels = images.to(device), labels.to(device) outputs = model(images) loss = criterion(outputs, labels) test_loss += loss.item() _, predicted = torch.max(outputs, 1) correct += (predicted == labels).sum().item() accuracy = correct / len(testloader.dataset) print('Test Loss: {:.4f}, Accuracy: {:.2f}%'.format(test_loss / len(testloader), accuracy * 100)) ``` Conclusion In this blog post, we implemented a multi-class logistic regression classifier in PyTorch for MNIST image classification. We covered the basics of logistic regression, PyTorch implementation, and provided a step-by-step guide to train and evaluate the model. The code is available on GitHub for reference. | Generated Response Multi-Class Logistic Regression in PyTorchBy Adrian Tam on January 15, 2024 in Deep Learning with PyTorch In this tutorial, you will discover how to implement a multi-class logistic regression classifier in PyTorch for MNIST image classification. After completing this tutorial, you will know:

Kick-start your project with my book Deep Learning with PyTorch. It provides self-study tutorials with working code. Tutorial OverviewThis tutorial is divided into three parts; they are:

Multi-Class Logistic RegressionIn the previous tutorial, we learned how to implement a binary logistic regression classifier in PyTorch. In this tutorial, we will learn how to implement a multi-class logistic regression classifier in PyTorch. The multi-class logistic regression classifier is an extension of the binary logistic regression classifier. It is used to classify data into multiple classes. The multi-class logistic regression classifier uses the softmax activation function to output a probability distribution over all classes. The softmax activation function is defined as: softmax(x) = exp(x) / Σ exp(x) where x is the input to the softmax function, and exp(x) is the exponential function. The multi-class logistic regression classifier uses the cross-entropy loss function to measure the difference between the predicted probability distribution and the true probability distribution. The cross-entropy loss function is defined as: L(y, y_pred) = - Σ y[i] log(y_pred[i]) where y is the true probability distribution, y_pred is the predicted probability distribution, and i is the index of the class. Multi-Class Logistic Regression in PyTorchIn this section, we will learn how to implement a multi-class logistic regression classifier in PyTorch. We will use the MNIST dataset to train and evaluate the classifier. The MNIST dataset is a dataset of handwritten digits, and it is commonly used to train and evaluate image classification models. The MNIST dataset is divided into two parts: the training set and the testing set. The training set contains 60,000 images of handwritten digits, and the testing set contains 10,000 images of handwritten digits. We will use the PyTorch library to load the MNIST dataset and to implement the multi-class logistic regression classifier. ```python import torch import torch.nn as nn import torchvision import torchvision.transforms as transforms Load the MNIST datasettransform = transforms.Compose([transforms.ToTensor()]) trainset = torchvision.datasets.MNIST(root='./data', train=True, download=True, transform=transform) trainloader = torch.utils.data.DataLoader(trainset, batch_size=64, shuffle=True) testset = torchvision.datasets.MNIST(root='./data', train=False, download=True, transform=transform) testloader = torch.utils.data.DataLoader(testset, batch_size=64, shuffle=False) ``` We will use the PyTorch library to implement the multi-class logistic regression classifier. ```python class LogisticRegression(nn.Module): def init(self): super(LogisticRegression, self).init() self.fc = nn.Linear(784, 10) def forward(self, x): ``` We will use the cross-entropy loss function to measure the difference between the predicted probability distribution and the true probability distribution.

We will use the stochastic gradient descent (SGD) optimizer to update the weights of the classifier.

We will train the classifier for 10 epochs.

We will evaluate the classifier on the testing set.

Making Predictions on the MNIST DatasetIn this section, we will learn how to use the multi-class logistic regression classifier to make predictions on the MNIST dataset. We will use the PyTorch library to load the MNIST dataset.

We will use the multi-class logistic regression classifier to make predictions on the testing set.

Further ReadingThis section provides more resources on the topic if you are looking to go deeper. SummaryIn this tutorial, you learned:

Specifically, you learned how to:

Get a copy of the code from the book Deep Learning with PyTorch. See This Tutorial in ActionThe following code is a complete example of the tutorial: ```python import torch import torch.nn as nn import torchvision import torchvision.transforms as transforms Load the MNIST datasettransform = transforms.Compose([transforms.ToTensor()]) trainset = torchvision.datasets.MNIST(root='./data', train=True, download=True, transform=transform) trainloader = torch.utils.data.DataLoader(trainset, batch_size=64, shuffle=True) testset = torchvision.datasets.MNIST(root='./data', train=False, download=True, transform=transform) testloader = torch.utils.data.DataLoader(testset, batch_size=64, shuffle=False) Implement the multi-class logistic regression classifierclass LogisticRegression(nn.Module): def init(self): super(LogisticRegression, self).init() self.fc = nn.Linear(784, 10) def forward(self, x): Initialize the classifiermodel = LogisticRegression() Initialize the cross-entropy loss functioncriterion = nn.CrossEntropyLoss() Initialize the stochastic gradient descent (SGD) optimizeroptimizer = torch.optim.SGD(model.fc.parameters(), lr=0.01, momentum=0.9) Train the classifier for 10 epochsfor epoch in range(10): running_loss = 0.0 for i, data in enumerate(trainloader, 0): inputs, labels = data optimizer.zero_grad() outputs = model(inputs) loss = criterion(outputs, labels) loss.backward() optimizer.step() running_loss += loss.item() print('Epoch %d, Loss: %.3f' % (epoch+1, running_loss/(i+1))) Evaluate the classifier on the testing setmodel.eval() test_loss = 0 correct = 0 with torch.no_grad(): for data in testloader: inputs, labels = data outputs = model(inputs) loss = criterion(outputs, labels) test_loss += loss.item() _, predicted = torch.max(outputs.data, 1) correct += (predicted == labels).sum().item() accuracy = correct / len(testloader.dataset) print('Test Loss: %.3f, Accuracy: %.2f%%' % (test_loss/len(testloader), accuracy*100)) Use the classifier to make predictions on the MNIST datasetmodel.eval() with torch.no_grad(): for data in testloader: inputs, labels = data outputs = model(inputs) _, predicted = torch.max(outputs.data, 1) print('Predicted:', predicted) print('Actual:', labels) ``` |

Now we've seen how, with just a few lines of code, the Pi SDK enabled us to try Supervised Fine Tuning to dramatically improve our Blog Post Generator. Get started trying our Fine Tuning SDK or try it out in a UI today!